December 26, 2024

Setting Up Approvals in SharePoint Lists: A Step-by-Step Guide

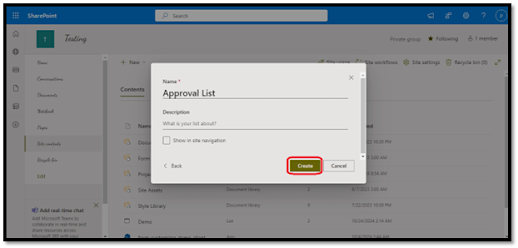

1. Create a New List:

b. Click "Add a list" and select "Blank List".

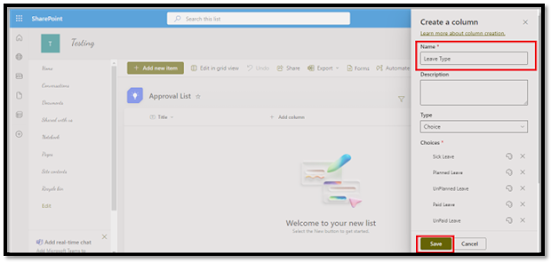

d. Create necessary columns (e.g. "Leave Type", "Description", "Start Date", "End Date").

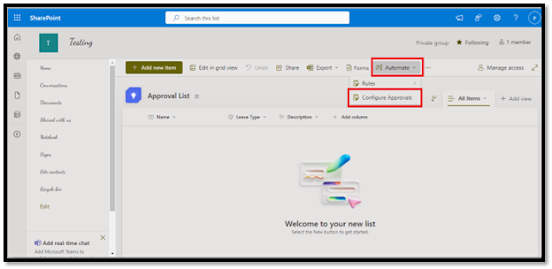

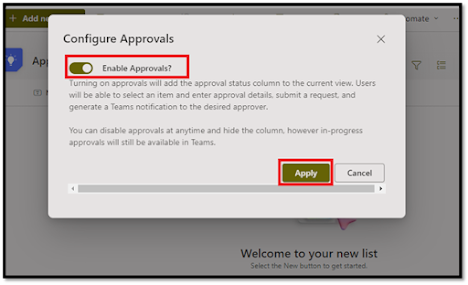

a. In the list ribbon, click "Automate" and then "Configure Approvals".

b. Toggle the "Enable Approvals" switch on and click on "Apply".

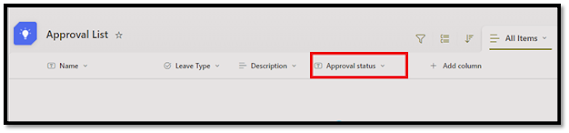

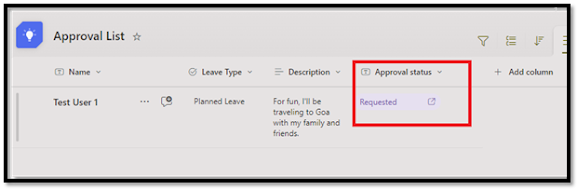

c. This action automatically adds an Approval Status column to your list, tracking the stage of each approval.

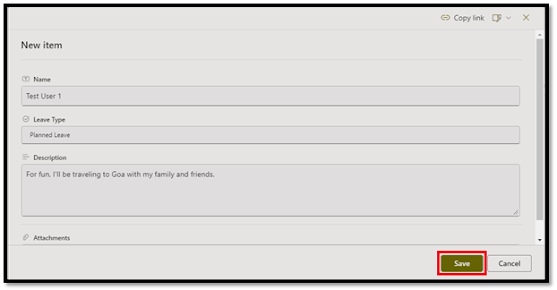

b. Fill in the required details (e.g. leave type, description).

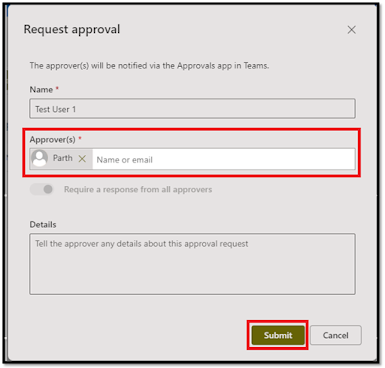

b. In the pop-up, select the approver's name(s) using the People picker and add any additional comments or details, then click "Submit" to send the item for approval.

a. After a few minutes, the Approval Status will be changed from Not Submitted to Requested.

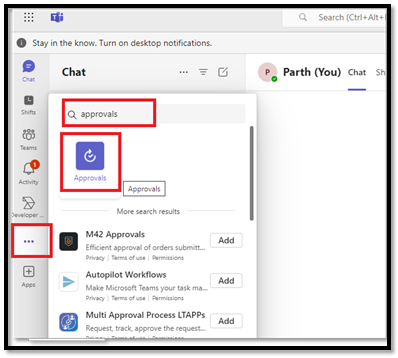

b. Open Teams, click the three dots (...), search for Approvals, and click Add.

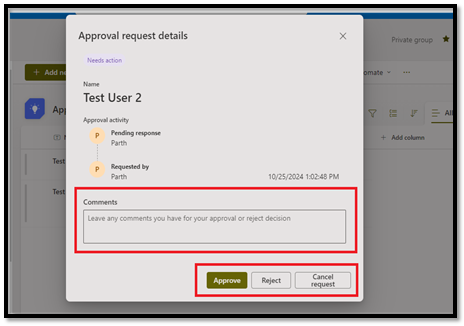

a. When an item is submitted, the status changes to Requested. At this stage, approvers can view the item's approval history and choose from several actions:

b. Approval using SharePoint:

i. Approve: Click the "Approve" button to approve the request.

iii. Cancel Request: Withdraws the request, resetting the Approval Status to Not Submitted.

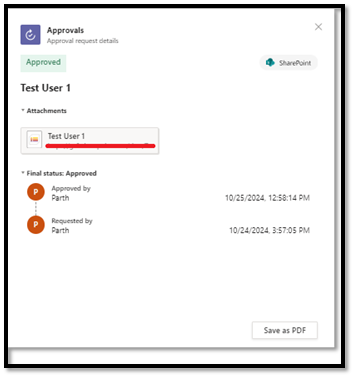

a. Each item in the list retains a history of actions, which includes who approved, rejected, or reassigned the item.

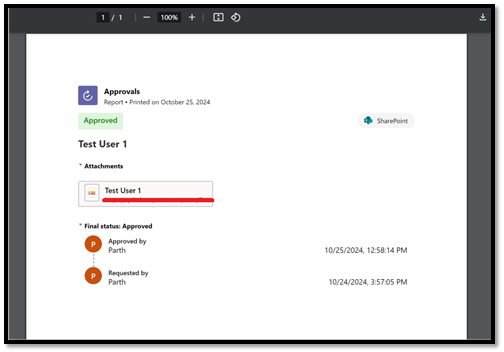

a. Once a request is completed, you can save the approval record as a PDF for documentation purposes.

- Initial Status: "Not Submitted"

- Enhanced Control: Centralized management of approvals.

- Limited Customization: Basic approval workflow may not suit complex scenarios.

- Clear and Concise Descriptions: Provide detailed explanations for approval requests.

November 14, 2024

Level-up your .NET Skills: Automate, Validate, and Secure your Code

In the .NET ecosystem, there are many libraries that simplify common tasks, making the development process smoother and more efficient. In this post, we will explore three libraries that are indispensable in modern .NET applications: AutoMapper, FluentValidation, and BCrypt.Net. These libraries help with data mapping, validation, and security, respectively.

1. AutoMapper: Simplifying Object Mappings

AutoMapper is a library that eliminates the need to manually map properties from one object to another. This is especially helpful when dealing with Data Transfer Objects (DTOs) or ViewModels, where the structure may differ from the domain entities.

Problem:

Consider a scenario where you have a User entity with a lot of fields, but you only need a subset of those fields to be sent in an API response. Manually copying each property from the entity to a DTO can become tedious and error-prone.

Solution with AutoMapper:

AutoMapper provides a streamlined approach to map these objects.

Step-by-Step Example:

1. Define your domain model (User) and DTO (UserDTO):

    using System;

    public class User
    {
        public int Id { get; set; }
        public string FirstName { get; set; }
        public string LastName { get; set; }
        public string Email { get; set; }
        public string PasswordHash { get; set; }
        public DateTime DateOfBirth { get; set; }
    }

    public class UserDTO
    {
        public int Id { get; set; }
        public string FullName { get; set; }
        public string Email { get; set; }
    }

2. Create an AutoMapper Profile to define the mapping:

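    using AutoMapper;

    public class UserProfile : Profile
    {
        public UserProfile()
        {
            // FullName has no direct counterpart on User, so build it from the two name fields.
            CreateMap<User, UserDTO>()
                .ForMember(dest => dest.FullName, opt => opt.MapFrom(src => $"{src.FirstName} {src.LastName}"));
        }
    }

3. Register AutoMapper with the dependency injection container:

    public class Startup
    {
        public void ConfigureServices(IServiceCollection services)
        {
            // Scans the assembly that contains Startup for Profile classes.
            services.AddAutoMapper(typeof(Startup));
        }
    }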

4. Use AutoMapper in your application:

    public class UserController : ControllerBase
    {
        private readonly IMapper _mapper;

        // AppDbContext is a hypothetical EF Core context that exposes a Users set.
        private readonly AppDbContext _dbContext;

        public UserController(IMapper mapper, AppDbContext dbContext)
        {
            _mapper = mapper;
            _dbContext = dbContext;
        }

        [HttpGet("{id}")]
        public ActionResult<UserDTO> GetUser(int id)
        {
            var user = _dbContext.Users.Find(id);
            if (user == null) return NotFound();

            // Map User to UserDTO
            var userDto = _mapper.Map<UserDTO>(user);
            return Ok(userDto);
        }
    }

Explanation:

- The User object is retrieved from the database.

- The IMapper.Map method converts the User object to a UserDTO object with minimal effort.

2. FluentValidation: Clean and Readable Model Validation

FluentValidation simplifies model validation by allowing developers to write validation logic in a fluent, expressive syntax, keeping the validation logic separate from the model itself.

Problem:

Manually validating model fields (e.g., ensuring required fields are filled, data formats are correct, etc.) often leads to messy and repetitive code.

Solution with FluentValidation:

FluentValidation provides a cleaner way to handle validations with reusable, strongly-typed rules.

Step-by-Step Example:

1. Define your model (User):

    public class User
    {
        public string Email { get; set; }
        public string Password { get; set; }
        public DateTime DateOfBirth { get; set; }
    }

2. Create a Validator class for the model:

    using System;
    using FluentValidation;

    public class UserValidator : AbstractValidator<User>
    {
        public UserValidator()
        {
            RuleFor(user => user.Email)
                .NotEmpty().WithMessage("Email is required.")
                .EmailAddress().WithMessage("A valid email is required.");

            RuleFor(user => user.Password)
                .NotEmpty().WithMessage("Password is required.")
                .MinimumLength(8).WithMessage("Password must be at least 8 characters long.");

            RuleFor(user => user.DateOfBirth)
                .NotEmpty().WithMessage("Date of birth is required.")
                .Must(BeAtLeast18).WithMessage("You must be at least 18 years old.");
        }

        // True when the date of birth falls at least 18 years before the current moment.
        private bool BeAtLeast18(DateTime dateOfBirth)
        {
            return dateOfBirth <= DateTime.Now.AddYears(-18);
        }
    }

3. Use FluentValidation in your Controller or Service:

    public class UserController : ControllerBase
    {
        private readonly IValidator<User> _validator;

        public UserController(IValidator<User> validator)
        {
            _validator = validator;
        }

        [HttpPost]
        public IActionResult Register(User user)
        {
            var validationResult = _validator.Validate(user);
            if (!validationResult.IsValid)
            {
                return BadRequest(validationResult.Errors);
            }

            // Proceed with registration logic
            return Ok();
        }
    }

Explanation:

The UserValidator class defines validation rules for the User model.

The RuleFor method is used to apply specific validation rules for each property, with a custom rule for checking the age. The Validate method checks if the model is valid, and any errors are returned as a response.

3. BCrypt.Net: Securing User Passwords

BCrypt.Net is a library for hashing passwords securely. Passwords should never be stored in plain text, and BCrypt helps ensure password security with hashing and salting.

Problem:

Storing passwords as plain text in databases makes user accounts vulnerable to data breaches and attacks.

Solution with BCrypt.Net:

BCrypt is widely regarded as a secure way to hash passwords. It generates a random salt for every hash, so identical passwords produce different hashes and precomputed rainbow tables become ineffective.
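To make the role of the salt concrete, here is a small language-neutral sketch in Python. It does not use bcrypt: PBKDF2-HMAC-SHA256 from the Python standard library stands in for it, and the helper name hash_password, the iteration count, and the sample password are assumptions made for this illustration only.

```python
import hashlib
import os


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a hash from the password and salt.

    PBKDF2-HMAC-SHA256 is a stand-in for bcrypt here (assumption for the
    sketch); the point is only how a per-password salt changes the result.
    """
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


password = "correct horse battery staple"

# Two users pick the same password but get different random salts.
salt_a = os.urandom(16)
salt_b = os.urandom(16)
hash_a = hash_password(password, salt_a)
hash_b = hash_password(password, salt_b)

# The stored hashes differ, so one precomputed table cannot cover both.
print(hash_a != hash_b)

# Verification recomputes the hash with the salt stored for that user.
print(hash_password(password, salt_a) == hash_a)
```

BCrypt.Net handles this for you: the salt is generated inside HashPassword and stored as part of the resulting hash string, which is why Verify needs only the password and the hash.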

Step-by-Step Example:

1. Install BCrypt.Net:

dotnet add package BCrypt.Net-Next

2. Hash a password before storing it:

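    public class UserService
    {
        // Generates a random salt and returns a hash string that embeds it.
        public string HashPassword(string password)
        {
            return BCrypt.Net.BCrypt.HashPassword(password);
        }

        // Re-hashes the password with the salt embedded in the hash and compares the results.
        public bool VerifyPassword(string password, string hash)
        {
            return BCrypt.Net.BCrypt.Verify(password, hash);
        }
    }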

3. Use BCrypt in your registration and login logic:

    public class UserController : ControllerBase
    {
        private readonly UserService _userService;

        public UserController(UserService userService)
        {
            _userService = userService;
        }

        [HttpPost("register")]
        public IActionResult Register(string password)
        {
            var hashedPassword = _userService.HashPassword(password);

            // Save hashedPassword to the database (omitted for brevity)
            return Ok("User registered successfully.");
        }

        [HttpPost("login")]
        public IActionResult Login(string password, string storedHash)
        {
            // storedHash is a parameter only to keep the example short;
            // in a real application, load it from the database for the user.
            if (_userService.VerifyPassword(password, storedHash))
            {
                return Ok("Login successful.");
            }

            return Unauthorized("Invalid password.");
        }
    }

Explanation:

- HashPassword is used to hash the user's password before storing it in the database.

- During login, VerifyPassword checks whether the entered password matches the stored hash, ensuring secure authentication.

Conclusion

These libraries (AutoMapper, FluentValidation, and BCrypt.Net) offer solutions to common problems encountered in .NET development. By using them, you can focus on writing cleaner, more maintainable, and more secure code while relying on well-tested solutions for routine tasks.

If you have any questions, you can reach out to our SharePoint Consulting team here.

October 15, 2024

How to Set Up SharePoint Brand Center: A Step-by-Step Guide

- One Brand Center per Organization: SharePoint currently allows only one Brand Center for each tenant. All branding assets and font management will be centralized in one location.

- Global Administrator Setup: Global Administrator privileges are necessary to activate the Brand Center, ensuring appropriate configuration and management of the organization's brand identity.

5. Finalize Setup: Click Create site. Once complete, links to the Brand Center site and app will be generated. Copy the necessary link and sign in to access the app.


2. Open the Brand Center App: Click on Settings and select Brand Center (preview). Once inside the app, click Add Fonts.

- TrueType fonts (.ttf)

- OpenType fonts (.otf)

- Web Open Font Format (.woff)

- Web Open Font Format 2 (.woff2)


September 26, 2024

Mastering SQL: 9 Best Practices for Writing Efficient Queries

1. Capitalize SQL Keywords

When writing SQL queries, it's a good practice to capitalize all SQL keywords such as SELECT, WHERE, and JOIN. This simple step improves readability, making it easier for other developers to quickly understand the structure and logic of your query.

2. Use Aliases for Table Names

Aliases provide a shorthand for table names, making your queries easier to read, especially when dealing with long or multiple table names. Aliases also help prevent ambiguity when columns from different tables share the same name.
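A minimal sketch of table aliases, run against an in-memory SQLite database from Python so it is self-contained. The employees and departments tables, their columns, and the sample rows are assumptions made up for this illustration.

```python
import sqlite3

# Hypothetical schema, created in memory only for this illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, department_id INTEGER);
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
    INSERT INTO employees VALUES (1, 'Asha', 1), (2, 'Ben', 2);
""")

# Both tables have "id" and "name" columns; the aliases e and d
# state which table each column comes from.
alias_query = """
    SELECT e.name AS employee_name, d.name AS department_name
    FROM employees AS e
    JOIN departments AS d ON e.department_id = d.id
    ORDER BY e.id
"""
alias_rows = conn.execute(alias_query).fetchall()
print(alias_rows)
```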

e and d are aliases that make the query shorter and cleaner.

3. Avoid SELECT *; Always Specify Columns in SELECT Clause

Using SELECT * might seem convenient, but it fetches all columns, which can be inefficient, especially in tables with many columns. Instead, explicitly specify the columns you need to reduce unnecessary data retrieval and enhance performance.
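The difference can be seen by comparing what each form returns. This is a sketch using Python's built-in sqlite3 module; the employees table and its columns are hypothetical.

```python
import sqlite3

# Hypothetical table with more columns than the caller needs.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY, name TEXT, email TEXT,
        salary INTEGER, notes TEXT
    );
    INSERT INTO employees VALUES (1, 'Asha', 'asha@example.com', 5000, 'long text');
""")

# SELECT * returns every column, including ones the caller never uses.
star_cursor = conn.execute("SELECT * FROM employees")
star_columns = [col[0] for col in star_cursor.description]

# Naming the columns returns only what is needed.
named_cursor = conn.execute("SELECT id, name FROM employees")
named_columns = [col[0] for col in named_cursor.description]
named_rows = named_cursor.fetchall()

print(star_columns)
print(named_columns, named_rows)
```

Listing columns explicitly also keeps the result shape stable if someone later adds a column to the table.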

4. Comment Only When Necessary

While it's important to comment on complex logic to explain your thought process, over-commenting can clutter the query. Focus on adding comments only when necessary, such as when the logic might not be immediately clear to others.

5. Use Joins Instead of Subqueries for Better Performance

Joins are often more efficient than subqueries, particularly correlated subqueries that the database may evaluate once for every outer row. The difference is most noticeable with large datasets.
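As a sketch, the same question ("which employees work in a London department?") can be written both ways. The schema and data are hypothetical, and SQLite via Python is used only to keep the example runnable. Whether the join is actually faster depends on the database engine and its optimizer, so treat this as a demonstration of equivalence rather than a benchmark.

```python
import sqlite3

# Hypothetical schema for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, department_id INTEGER);
    INSERT INTO departments VALUES (1, 'Engineering', 'London'), (2, 'Sales', 'Paris');
    INSERT INTO employees VALUES (1, 'Asha', 1), (2, 'Ben', 2), (3, 'Chen', 1);
""")

# Subquery version: filter employees by a nested SELECT.
subquery_sql = """
    SELECT name
    FROM employees
    WHERE department_id IN (SELECT id FROM departments WHERE city = 'London')
    ORDER BY id
"""

# Join version: the same filter expressed as a join.
join_sql = """
    SELECT e.name
    FROM employees AS e
    JOIN departments AS d ON e.department_id = d.id
    WHERE d.city = 'London'
    ORDER BY e.id
"""

subquery_rows = conn.execute(subquery_sql).fetchall()
join_rows = conn.execute(join_sql).fetchall()
print(subquery_rows == join_rows, join_rows)
```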

6. Create CTEs Instead of Multiple Subqueries for Better Readability

Common Table Expressions (CTEs) make your queries easier to read and debug, especially when working with complex queries. CTEs provide a temporary result set that you can reference in subsequent queries, improving both readability and maintainability.
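A minimal CTE sketch, again using SQLite from Python with a hypothetical schema: the per-department totals are computed once under a readable name (department_totals, an illustrative name) and then filtered in the main query.

```python
import sqlite3

# Hypothetical schema for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY, name TEXT, department_id INTEGER, salary INTEGER
    );
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
    INSERT INTO employees VALUES
        (1, 'Asha', 1, 5000), (2, 'Ben', 2, 4000), (3, 'Chen', 1, 4500);
""")

# The CTE names the intermediate result instead of nesting it as a subquery.
cte_sql = """
    WITH department_totals AS (
        SELECT department_id, SUM(salary) AS total_salary
        FROM employees
        GROUP BY department_id
    )
    SELECT d.name, t.total_salary
    FROM department_totals AS t
    JOIN departments AS d ON d.id = t.department_id
    WHERE t.total_salary > 6000
    ORDER BY d.name
"""
cte_rows = conn.execute(cte_sql).fetchall()
print(cte_rows)
```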

7. Use the JOIN Keyword Instead of WHERE for Joins

Using the JOIN keyword makes your SQL queries more readable and semantically clear, rather than placing join conditions in the WHERE clause.
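The two styles side by side, as a sketch on a hypothetical schema in SQLite. Both return the same rows; the explicit form keeps the join condition in ON and leaves WHERE for filtering.

```python
import sqlite3

# Hypothetical schema for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, department_id INTEGER);
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
    INSERT INTO employees VALUES (1, 'Asha', 1), (2, 'Ben', 2), (3, 'Chen', 1);
""")

# Implicit join: the join condition is mixed into WHERE with the filter.
implicit_sql = """
    SELECT e.name, d.name
    FROM employees AS e, departments AS d
    WHERE e.department_id = d.id AND d.name = 'Engineering'
    ORDER BY e.id
"""

# Explicit join: ON holds the join condition, WHERE holds only the filter.
explicit_sql = """
    SELECT e.name, d.name
    FROM employees AS e
    JOIN departments AS d ON e.department_id = d.id
    WHERE d.name = 'Engineering'
    ORDER BY e.id
"""

implicit_rows = conn.execute(implicit_sql).fetchall()
explicit_rows = conn.execute(explicit_sql).fetchall()
print(implicit_rows == explicit_rows, explicit_rows)
```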

8. Avoid ORDER BY in Subqueries

Using ORDER BY in subqueries can cause unnecessary performance hits since the sorting is often unnecessary at that point. Instead, apply ORDER BY only when absolutely needed in the final result set.
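A sketch of the idea on a hypothetical employees table in SQLite. The first query sorts inside the subquery, where the order is not guaranteed to carry through to the outer result; the second sorts once, on the final result set.

```python
import sqlite3

# Hypothetical table for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER);
    INSERT INTO employees VALUES (1, 'Asha', 5000), (2, 'Ben', 4000), (3, 'Chen', 4500);
""")

# Sorting inside the subquery: extra work, and the outer query
# makes no promise about preserving that order.
redundant_sql = """
    SELECT name
    FROM (SELECT name, salary FROM employees ORDER BY salary DESC) AS ranked
    WHERE salary > 4200
"""

# Sorting only the final result set.
preferred_sql = """
    SELECT name
    FROM employees
    WHERE salary > 4200
    ORDER BY salary DESC
"""

redundant_rows = conn.execute(redundant_sql).fetchall()
preferred_rows = conn.execute(preferred_sql).fetchall()
print(preferred_rows)
```

One case where ORDER BY inside a subquery is needed is when it is paired with LIMIT (or TOP), because there the sort decides which rows are kept.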

9. Use UNION ALL Instead of UNION When You Know There Are No Duplicates

The UNION operator removes duplicates by default, which adds overhead to your query. If you're certain there are no duplicates, using UNION ALL can greatly improve performance by skipping the duplicate-checking step.
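The behavioral difference is easy to see on a small example. This sketch uses two hypothetical tables in SQLite that share one email address, so it also shows why UNION ALL is only appropriate when duplicates are impossible or acceptable.

```python
import sqlite3

# Hypothetical tables for illustration; 'b@example.com' appears in both.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE current_customers (email TEXT);
    CREATE TABLE archived_customers (email TEXT);
    INSERT INTO current_customers VALUES ('a@example.com'), ('b@example.com');
    INSERT INTO archived_customers VALUES ('b@example.com'), ('c@example.com');
""")

# UNION removes duplicate rows, which requires an extra de-duplication step.
union_rows = conn.execute("""
    SELECT email FROM current_customers
    UNION
    SELECT email FROM archived_customers
    ORDER BY email
""").fetchall()

# UNION ALL simply concatenates the two result sets.
union_all_rows = conn.execute("""
    SELECT email FROM current_customers
    UNION ALL
    SELECT email FROM archived_customers
    ORDER BY email
""").fetchall()

print(len(union_rows), len(union_all_rows))
```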

By following these best practices, you’ll be able to write queries that run faster, are easier to understand, and scale well as your database grows. Small optimizations like avoiding SELECT *, using proper joins, and leveraging CTEs can make a big difference in the long run.

Happy querying❗
