Introduction:

Problem:

We encountered a scenario where we needed to create a separate Excel file with only the header structure from an existing file containing data. Unfortunately, Power Automate's Excel connector does not provide an out-of-the-box action to duplicate or copy a file structure.

Solution:

To address this challenge, we have developed a Power Automate flow that operates on an Excel file and generates another Excel file with only the header structure. These steps are applicable to any type of Excel file, regardless of the header structure. Hence, we can pass a dynamic header structure to the output file. Let's dive into the steps to achieve this:

Step 1:

First, we add the "For a selected file" trigger to initiate our flow. This trigger allows us to activate the flow when a specific file or item is selected within a SharePoint list or library.

In this step, we use the "Get table" action to fetch the table name from the existing parent Excel file. By using an expression to retrieve the file name dynamically, we ensure that the "Get table" action fetches the table name from the correct parent Excel file.

Next, we include the "List rows present in a table" action to fetch all the data from the Excel table. This action retrieves all the rows present within the specified table in the Excel file. We can dynamically set the "Table" value by retrieving the 'id' value from the first table obtained in the response of the "Get tables" action.

first(outputs('Get_tables')?['body/value'])['id']

Step 4:

We will add the "Compose" action and include the following expression:

first(outputs('List_rows_present_in_a_table')?['body/value'])['id']

This expression retrieves the first row of data from the output of the "List rows present in a table" action. By capturing the data from the first row using this expression within the "Compose" action, we store it for further processing.

Step 5:

Once we have obtained the output from the "Compose" action, we notice that it includes some unwanted properties such as "@odata.etag" and "ItemInternalId" above the actual data rows. To extract only the column names from the JSON object, we need to remove these unwanted properties.

The "From" value should be set to the following expression:

json(replace(string(outputs('Compose_5')), '@odata.', 'odata')) Insert the following expression as the input value:

removeProperty(removeProperty(item(), 'ItemInternalId'), 'odataetag')

This expression removes the "ItemInternalId" and "odataetag" properties from each item in the JSON array.

Clicking on the "Next step" button, we choose the "Create CSV file" action. In the "From" field of the "Create CSV file" action, we add the output of the previous "Select" action. This ensures that we capture the modified JSON object with the desired column names and associated values.

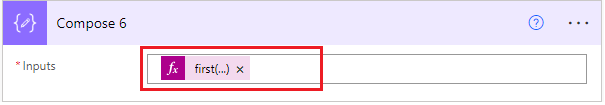

Step 7:

In this step, we utilize the "Compose" action to process the CSV table created in the previous step. Add the following expression in the "Inputs" field of the "Compose" action:

first(split(body('Create_CSV_table'), decodeUriComponent('%0D%0A')))

This expression splits the body of the "Create_CSV_table" action by the line break ("%0D%0A") and retrieves the first element. This allows us to isolate the header row of the CSV file, which consists of the column names. This allows us to isolate the header row of the CSV file, which consists of the column names.

Here, we have obtained the desired output, which includes the column names that will be utilized in our "Create table" action.

We add the "Create file" action to generate a blank Excel file. In the "File content" field of the "Create file" action, we enter a space to create a blank file.

Step 9:

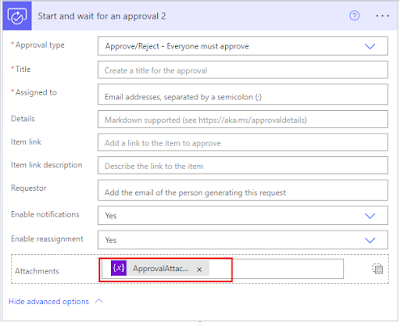

To create a table in the blank Excel file and include the column names from the "Compose" action in Step 7, we follow these steps:

In the "Column names" field of the "Create table" action, we enter the output of the "Compose" action from Step 7, which is "Compose 6".

By including the column names from the "Compose" action, we ensure that the table created in the Excel file will have the correct column headers.

Finally, we save and run the flow manually. You can see the flow generating a new Excel file with the exact same table structure as the parent file.

Conclusion:

This blog presented a straightforward method to dynamically create a new Excel file with the same header structure as the parent table, without any data. By leveraging Power Automate Flow and SharePoint, we achieved this outcome seamlessly.

If you have any questions you can reach out our SharePoint Consulting team here.